Introduction

In the fast-paced world of software development, staying ahead of the curve is crucial for success. One technology that has gained significant attention and transformed the way applications are built and deployed is Docker and its containers. As developers strive for more efficient and scalable solutions, understanding how Docker works and what it offers becomes essential.

Throughout my years of developing enterprise-level solutions, I have come to see the value of a thorough understanding of Docker's technology stack. Docker can fundamentally change the way we build and deploy software at scale.

In this article, we explore what Docker containers are, how they benefit developers, which drawbacks to consider, and how to use Docker effectively in enterprise-level codebases. So let's dive in.

What is Docker?

Docker began in 2008 as DotCloud, a platform-as-a-service (PaaS) company founded by Solomon Hykes in Paris. In 2013 the company pivoted to focus on democratizing the software containers its platform ran on.

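Containers are packages of software that contain everything an application needs to run: its code, runtime, libraries, and configuration. Unlike virtual machines, which virtualize hardware and run a full guest operating system, containers virtualize at the operating-system level: they share the host's kernel while each sees its own isolated set of processes, files, and network interfaces. This makes them lightweight and lets them run in many environments, from a private data center to the public cloud or a developer's laptop, as long as the host provides a compatible kernel and CPU architecture.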

Docker is a containerization platform that isolates an application from the underlying host infrastructure so that it can be built, tested, and deployed quickly and consistently.

The Evolution of Containerization

Containerization has evolved significantly since the early 2000s. In those early days, it was used primarily for server consolidation and virtualization. With the release of Docker in 2013, however, containers became far more accessible to developers, leading to their rapid adoption for software development and deployment.

The evolution of containerization can be divided into the following phases:

Early containerization: In the early 2000s, containerization technology was used mainly for server consolidation. FreeBSD Jails, the Linux-VServer project, and Solaris Zones were among the first projects to introduce containerization. These early solutions were used primarily for virtualization and sandboxing.

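Development of generic process containers: In 2007, Google engineers presented a paper on adding generic process containers to the Linux kernel. That work, renamed control groups (cgroups), was merged into the kernel in early 2008 and lets the kernel limit and account for the CPU, memory, and I/O used by groups of processes. Combined with kernel namespaces, which isolate what a process can see, cgroups became a foundation of Linux containers.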

Introduction of LXC: In 2008, the LXC (Linux Containers) project was released. LXC combined cgroups and namespaces into userspace tools for creating and managing containers on a standard Linux kernel, an important step in making containerization accessible to developers. Early versions of Docker used LXC to run containers.

The arrival of Docker: Docker was released in 2013 and quickly gained popularity among developers. It simplified containerization with a straightforward command-line workflow and introduced a portable, layered image format that made containerized applications easy to share and distribute.

Container orchestration: As containers spread, managing and orchestrating large numbers of them became a challenge. Orchestration tools such as Kubernetes, Docker Swarm, and Apache Mesos emerged to automate deploying, scaling, and managing containers across clusters of machines.

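Evolution of container runtimes: In recent years, new runtimes and sandboxing technologies such as Kata Containers, gVisor, and Firecracker have emerged. They provide stronger isolation than standard containers, either by running workloads inside lightweight virtual machines (Kata Containers, Firecracker microVMs) or by intercepting system calls in a user-space kernel (gVisor).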

The inception of Docker

The inception of Docker and the rise of containerization as a mainstream technology have significantly transformed the software development and deployment landscape. Docker, announced by DotCloud in 2013, played a pivotal role in popularizing containers and making them accessible to developers. Here is an overview of the inception of Docker and its impact:

In 2013, DotCloud, a platform-as-a-service (PaaS) company, announced the Docker project. The initial release of Docker focused on providing a simple and efficient way to package and distribute applications in lightweight containers.

Docker introduced a layered file system and an image format that allowed developers to create portable and reproducible application environments. This innovation made it easier to share, deploy, and scale applications across different environments.
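To make the idea of layering concrete, here is a minimal Python sketch that models each layer as a mapping of file paths to contents. It is a toy model, not Docker's implementation: real images store each layer as a tar archive identified by a SHA-256 digest, and storage drivers such as overlay2 merge the layers on disk. The names `layer_digest` and `union_view` and the sample files are hypothetical. The sketch shows two properties: upper layers override lower ones, and identical layers get identical digests, so images that share a base layer only need to store it once.

```python
import hashlib
from collections import ChainMap

# Toy model (hypothetical): a layer maps file paths to file contents.
Layer = dict[str, bytes]


def layer_digest(layer: Layer) -> str:
    """Content address for a layer: SHA-256 over its length-prefixed paths and contents.

    Real Docker layers are tar archives hashed as bytes; this only mimics the idea.
    """
    h = hashlib.sha256()
    for path in sorted(layer):
        for chunk in (path.encode(), layer[path]):
            h.update(len(chunk).to_bytes(8, "big"))  # length prefix avoids ambiguity
            h.update(chunk)
    return "sha256:" + h.hexdigest()


def union_view(layers: list[Layer]) -> ChainMap:
    """Merge layers listed bottom-to-top; lookups check the topmost layer first."""
    return ChainMap(*reversed(layers))


# Two hypothetical images built on the same base layer.
base: Layer = {"/etc/os-release": b"ID=debian\n", "/usr/bin/python3": b"<binary>"}
image_a = [base, {"/app/main.py": b"print('A')\n"}]
image_b = [base, {"/app/main.py": b"print('B')\n", "/etc/os-release": b"ID=custom\n"}]

# A content-addressed store keeps one copy per distinct digest.
store = {layer_digest(layer): layer for image in (image_a, image_b) for layer in image}
print(len(store))  # 3 distinct layers for 4 layer references
print(union_view(image_b)["/etc/os-release"])  # the upper layer's version wins
```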

The key breakthrough that Docker brought to containerization was its use of container images. Images are built declaratively from a Dockerfile, which can be kept under version control alongside the application code, making it easy for developers to define the dependencies and configuration their applications require.

Docker also introduced a user-friendly command-line interface and an extensive ecosystem of tools and services around containerization. This made it easier for developers to work with containers, build containerized applications, and manage container deployments.

The open-source nature of Docker allowed for a vibrant community to form around it. This community contributed to the rapid adoption and evolution of Docker, leading to the creation of numerous Docker images and the development of containerization best practices.

Docker's popularity grew rapidly due to its ability to provide lightweight, isolated, and portable application environments. Developers embraced Docker for its ease of use, flexibility, and ability to streamline the development and deployment processes.

Docker's success also shaped the wider ecosystem. Kubernetes, an open-source container orchestration platform originally developed at Google, supported Docker containers from its early releases and emerged as the de facto standard for managing containerized applications.

Docker's impact extended beyond development environments and into production deployments. Containers became a fundamental building block for microservices architectures, enabling scalability, agility, and efficient resource utilization.

In summary, Docker's introduction in 2013 marked a turning point in containerization technology. Its user-friendly approach, emphasis on container images, and thriving ecosystem played a significant role in driving the widespread adoption of containers and revolutionizing software development and deployment practices.

Docker Components

Docker's main components are the Dockerfile, container images, the docker run command, Docker Hub, Docker Engine, Docker Compose, and Docker Desktop.

Dockerfile. A Dockerfile is a plain text file containing the instructions Docker follows to build a customized image: the base image (often a minimal operating system distribution), language runtimes, environment variables, file locations, network ports, and any other components the application needs to run. Containers are then started from the images a Dockerfile produces.
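As an illustration, the Python snippet below holds a small Dockerfile for a hypothetical Python web application (the base image tag, file names, and port are assumptions) and splits it into instructions with a deliberately simplified, hypothetical parser. Docker's real parser also handles line continuations, parser directives, and heredocs, which this sketch ignores.

```python
# A small example Dockerfile for a hypothetical Python web app.
DOCKERFILE = """\
# Base image: a slim Debian-based Python runtime
FROM python:3.12-slim

ENV APP_ENV=production
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
EXPOSE 8000
CMD ["python", "main.py"]
"""


def parse_instructions(text: str) -> list[tuple[str, str]]:
    """Hypothetical, simplified parser: returns (INSTRUCTION, arguments) pairs.

    Skips blank lines and comments; does not handle continuations or heredocs.
    """
    instructions = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(maxsplit=1)
        keyword = parts[0].upper()
        args = parts[1] if len(parts) > 1 else ""
        instructions.append((keyword, args))
    return instructions


for keyword, args in parse_instructions(DOCKERFILE):
    print(f"{keyword:<8} {args}")
```

Each instruction corresponds to a build step; instructions such as RUN and COPY add filesystem layers to the resulting image, while others such as ENV and EXPOSE record metadata.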

Docker image. A Docker image is a portable, read-only template, made up of stacked filesystem layers and configuration metadata, from which containers are created. It specifies which software components the container will run and how.
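Because the image is read-only, each running container gets its own thin writable layer on top of it, and changes are made copy-on-write. The following Python sketch models that idea under simplifying assumptions: the `Container` class is hypothetical, files are plain dictionary entries, and deletions (which Docker records as whiteout files) are not modeled.

```python
from collections import ChainMap
from types import MappingProxyType


class Container:
    """Hypothetical model: read-only image layers plus one private writable layer."""

    def __init__(self, image_layers: list[dict[str, bytes]]):
        # Wrap image layers read-only; list them top-down so upper layers win lookups.
        read_only = [MappingProxyType(layer) for layer in reversed(image_layers)]
        self._writable: dict[str, bytes] = {}
        self._view = ChainMap(self._writable, *read_only)

    def read(self, path: str) -> bytes:
        # Checks the writable layer first, then each image layer from the top.
        return self._view[path]

    def write(self, path: str, data: bytes) -> None:
        # Copy-on-write: the change lands only in this container's writable layer.
        self._writable[path] = data


image = [{"/etc/motd": b"hello\n"}]  # a one-layer example image
c1, c2 = Container(image), Container(image)
c1.write("/etc/motd", b"changed in c1\n")
print(c1.read("/etc/motd"))  # b'changed in c1\n'
print(c2.read("/etc/motd"))  # b'hello\n'
print(image[0]["/etc/motd"])  # b'hello\n' (the image itself is unchanged)
```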

Docker run utility. The docker run command creates and starts a container from an image. Each container is a running instance of an image, and multiple containers can run from the same image simultaneously.
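The sketch below builds the argument lists for running three instances of one image with docker run. It only assembles and prints the commands; `docker_run_argv` and `replicas` are hypothetical helpers, and nginx:latest, the container names, and the ports are example values. The --detach, --name, and --publish flags are standard docker run options. Each instance needs a unique name and, when ports are published, a distinct host port.

```python
import shlex


def docker_run_argv(image: str, name: str, host_port: int, container_port: int) -> list[str]:
    """Hypothetical helper: argument list for one detached `docker run` call."""
    return [
        "docker", "run",
        "--detach",                                    # run in the background
        "--name", name,                                # container names must be unique
        "--publish", f"{host_port}:{container_port}",  # host port -> container port
        image,
    ]


def replicas(image: str, count: int, base_port: int, container_port: int) -> list[list[str]]:
    """Hypothetical helper: one command per instance of the same image."""
    return [
        docker_run_argv(image, f"web-{i}", base_port + i, container_port)
        for i in range(1, count + 1)
    ]


for argv in replicas("nginx:latest", count=3, base_port=8080, container_port=80):
    print(shlex.join(argv))
```

Passing each list to `subprocess.run(argv, check=True)` would start the containers, assuming a running Docker daemon and permission to use it.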

Docker Hub. Docker Hub is a hosted registry where container images can be stored, shared, and managed, and it is the default registry the docker CLI pulls images from. Think of it as Docker's version of GitHub, but specifically for container images.

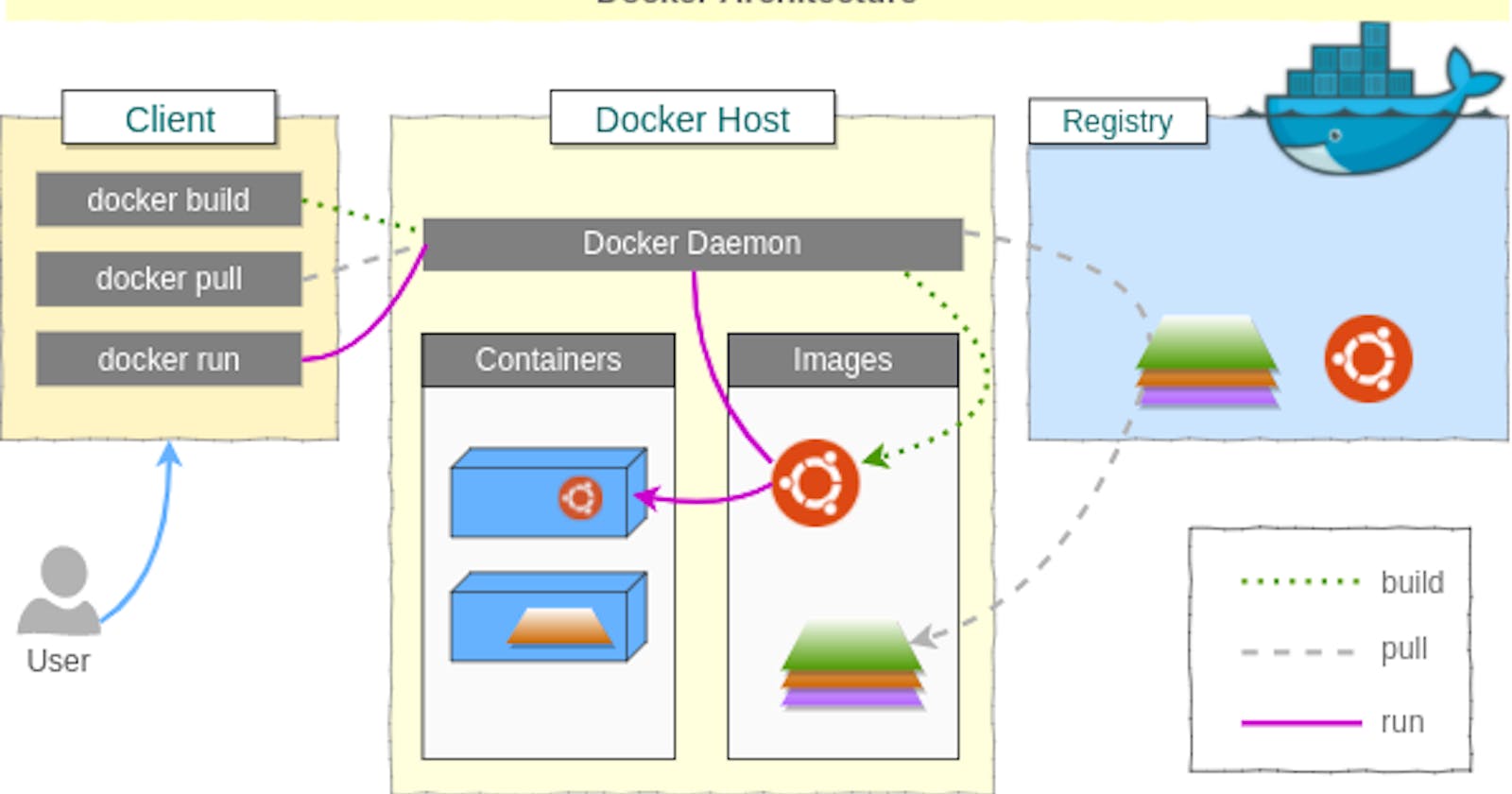
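Because Docker Hub is the default registry, a short name such as nginx resolves to docker.io/library/nginx:latest: the registry defaults to docker.io, official images live under the library namespace, and the tag defaults to latest. The hypothetical function below sketches that normalization in Python. It is a simplified approximation that does not handle digest references (@sha256:...) or validate names.

```python
def normalize_image_ref(ref: str) -> str:
    """Hypothetical, simplified expansion of an image reference to its full form."""
    first, sep, rest = ref.partition("/")
    # A first component containing '.' or ':' (or equal to 'localhost') names a registry host.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", ref  # Docker Hub is the default registry
    # Official images on Docker Hub live under the 'library' namespace.
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path
    # A tag follows ':' in the last path component; the default tag is 'latest'.
    if ":" not in path.rsplit("/", 1)[-1]:
        path += ":latest"
    return f"{registry}/{path}"


for ref in ["nginx", "python:3.12-slim", "bitnami/redis:7.2", "localhost:5000/myapp"]:
    print(f"{ref:<22} -> {normalize_image_ref(ref)}")
```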

Docker Engine. Docker Engine is the core of Docker. It is the underlying client-server technology that creates and runs the containers. The Docker Engine includes a long-running daemon process called dockerd for managing containers, APIs that allow programs to communicate with the Docker daemon, and a command-line interface.
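The Engine API is an HTTP API that dockerd serves, by default on Linux, over the Unix socket /var/run/docker.sock; the docker CLI is one client of it. The Python sketch below lists running containers by sending GET /containers/json over that socket using only the standard library. `UnixHTTPConnection` and `list_running_containers` are hypothetical helpers; in practice the Docker SDK for Python wraps these calls. For simplicity the request omits the API version prefix (such as /v1.41), whereas production code would normally pin a version the daemon supports.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """Hypothetical helper: an HTTPConnection over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def list_running_containers(socket_path: str = DOCKER_SOCKET) -> list[dict]:
    """GET /containers/json returns running containers (add ?all=true for all)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/containers/json")
        response = conn.getresponse()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned HTTP {response.status}")
        return json.loads(response.read())
    finally:
        conn.close()


# Usage (requires a running daemon and permission to access its socket):
# for c in list_running_containers():
#     print(c["Id"][:12], c["Image"], c["Status"])
```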

Docker Compose. Docker Compose is a command-line tool that uses YAML files to define and run multi-container Docker applications. It allows you to create, start, stop, and rebuild all the services from your configuration and view the status and log output of all running services.
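Compose uses the depends_on entries in that YAML file to decide the order in which services start. The Python sketch below approximates that ordering with a topological sort from the standard library's graphlib module. The service names and example/... images are hypothetical, the dictionary stands in for the services: section of a compose.yaml file, and this is a model of the behaviour rather than Compose's actual code.

```python
from graphlib import TopologicalSorter

# Hypothetical stand-in for a compose.yaml `services:` section with `depends_on`.
services = {
    "web":   {"image": "example/web:1.0", "depends_on": ["api"]},
    "api":   {"image": "example/api:1.0", "depends_on": ["db", "cache"]},
    "db":    {"image": "postgres:16"},
    "cache": {"image": "redis:7"},
}


def startup_order(services: dict[str, dict]) -> list[str]:
    """Order services so that each starts only after everything it depends on."""
    # TopologicalSorter expects node -> set of predecessors (here, its dependencies).
    graph = {name: set(spec.get("depends_on", [])) for name, spec in services.items()}
    return list(TopologicalSorter(graph).static_order())


print(startup_order(services))
```

By default, depends_on only waits for a dependency's container to start, not for the service inside it to be ready; Compose's long syntax with condition: service_healthy waits for a health check instead. A dependency cycle would make TopologicalSorter raise graphlib.CycleError.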

Docker Desktop. Docker Desktop packages Docker Engine, the docker CLI, Docker Compose, and related tools into a single application for macOS, Windows, and Linux, providing a user-friendly way to build and share containerized applications and microservices.
